Because software is software, it all gets thrown out every now and again for… reasons. BlueZ, on Linux, has moved away from hciconfig, hcitool, gatttool and so on, and it’s all “bluetoothctl” and “btmgmt” now. See https://wiki.archlinux.org/title/Bluetooth#Deprecated_BlueZ_tools for some more on that.

Anyway, I got some of these really cheap Xiaomi BTLE temperature+humidity meters. Turns out there’s a custom firmware you can flash to them and all sorts, but really, I was just interested in some basic usage, and also, as I’m getting my toes wet in bluetooth development, working out how to just plain use them as intended! (well, mostly, I’ve no intention of using any Xiaomi cloud apps)

This application claims to use the standard interface, and its “documentation” of this interface is https://github.com/JsBergbau/MiTemperature2#more-info and this little bit of code:

def connect():
    #print("Interface: " + str(args.interface))
    p = btle.Peripheral(adress,iface=args.interface)
    val=b'\x01\x00'
    p.writeCharacteristic(0x0038,val,True) #enable notifications of Temperature, Humidity and Battery voltage
    p.writeCharacteristic(0x0046,b'\xf4\x01\x00',True)
    p.withDelegate(MyDelegate("abc"))
    return p

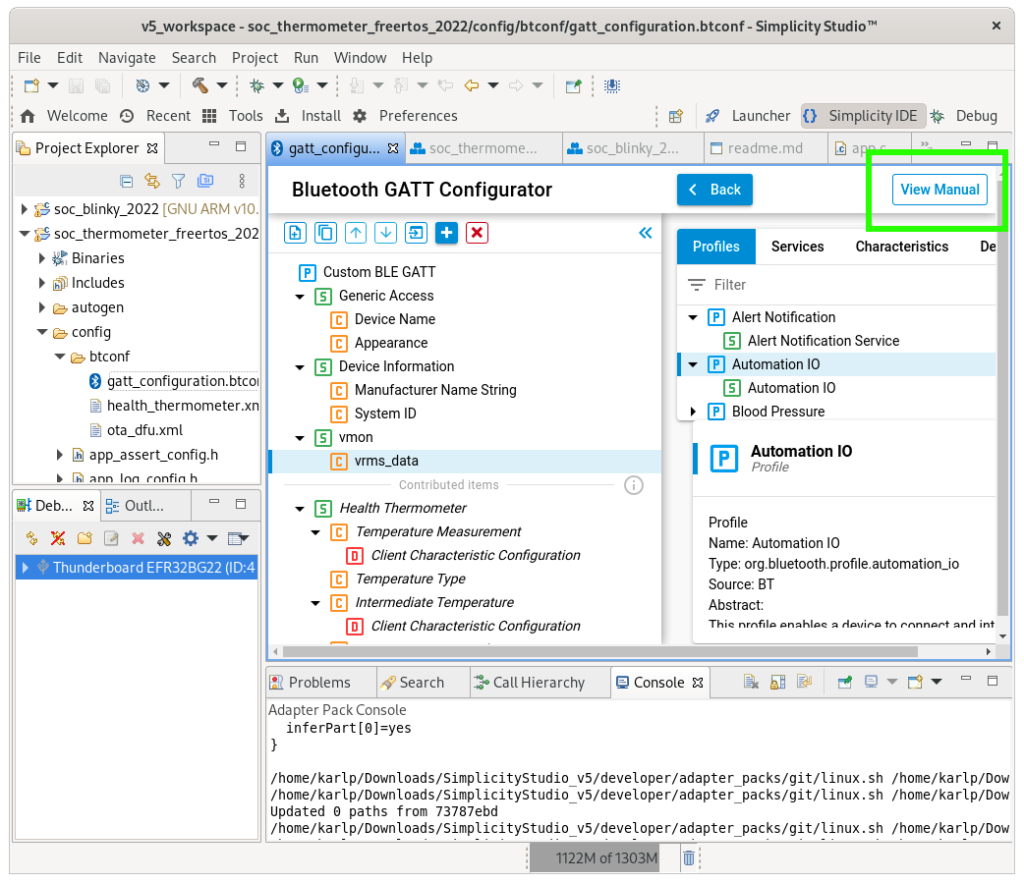

Well, that wasn’t very helpful. Using tools like NrfConnect, I’m expecting things like a service UUID and a characteristic UUID and things. Also, I don’t have gatttool to even try it like this :)
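For what it's worth, the val=b'\x01\x00' in that snippet looks like the standard value you write to a Client Characteristic Configuration descriptor (UUID 0x2902) to turn notifications on: a 16-bit little-endian bitfield where bit 0 means notify and bit 1 means indicate. That handle 0x0038 really is that descriptor on this firmware is my assumption, based only on the comment in the code. A minimal sketch (cccd_value is a made-up helper name):

```python
import struct

CCCD_NOTIFY = 0x0001    # bit 0: enable notifications
CCCD_INDICATE = 0x0002  # bit 1: enable indications

def cccd_value(notify=False, indicate=False):
    """Build the 2-byte little-endian value written to a CCCD (0x2902)."""
    flags = (CCCD_NOTIFY if notify else 0) | (CCCD_INDICATE if indicate else 0)
    return struct.pack("<H", flags)

# Same bytes as val in the snippet above.
print(cccd_value(notify=True))
```

With bluetoothctl you never write this by hand; “notify on” does it for you.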

So, how to do it in “modern” bluetoothctl? Here’s the cheatsheet. First, find our meters…

$ bluetoothctl

# menu scan

# clear

# transport le

# back

# scan on

SetDiscoveryFilter success

Discovery started

[CHG] Controller <your hci mac> Discovering: yes

[NEW] Device <a meter mac> LYWSD03MMC

[NEW] Device <other devices> <other ids>

# scan off

Now, we need to connect to it. According to https://github.com/custom-components/ble_monitor#supported-sensors it actually does broadcast the details about once every 10 minutes, which is neat, but you need a key for it, and aintnobodygottimeforthat.gif.

# connect <mac address>

Lots and lots of spam, like:

[NEW] Descriptor (Handle 0x9f94)

/org/bluez/hci0/dev_MAC_ADDRESS/service0060/char0061/desc0063

00002901-0000-1000-8000-00805f9b34fb

Characteristic User Description

[NEW] Descriptor (Handle 0xa434)

/org/bluez/hci0/dev_MAC_ADDRESS/service0060/char0061/desc0064

00002902-0000-1000-8000-00805f9b34fb

Client Characteristic Configuration

[NEW] Characteristic (Handle 0xa904)

/org/bluez/hci0/dev_MAC_ADDRESS/service0060/char0065

00000102-0065-6c62-2e74-6f696d2e696d

Vendor specific

[NEW] Descriptor (Handle 0xb1d4)

/org/bluez/hci0/dev_MAC_ADDRESS/service0060/char0065/desc0067

00002901-0000-1000-8000-00805f9b34fb

Characteristic User Description

....more lines like this.

[CHG] Device MAC_ADDRESS UUIDs: 00000100-0065-6c62-2e74-6f696d2e696d

[CHG] Device MAC_ADDRESS UUIDs: 00001800-0000-1000-8000-00805f9b34fb

[CHG] Device MAC_ADDRESS UUIDs: 00001801-0000-1000-8000-00805f9b34fb

[CHG] Device MAC_ADDRESS UUIDs: 0000180a-0000-1000-8000-00805f9b34fb

[CHG] Device MAC_ADDRESS UUIDs: 0000180f-0000-1000-8000-00805f9b34fb

[CHG] Device MAC_ADDRESS UUIDs: 0000fe95-0000-1000-8000-00805f9b34fb

[CHG] Device MAC_ADDRESS UUIDs: 00010203-0405-0607-0809-0a0b0c0d1912

[CHG] Device MAC_ADDRESS UUIDs: ebe0ccb0-7a0a-4b0c-8a1a-6ff2997da3a6

[CHG] Device MAC_ADDRESS ServicesResolved: yes
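A quick aside on that UUID list: the ones ending in -0000-1000-8000-00805f9b34fb are standard 16-bit assigned numbers wrapped in the Bluetooth Base UUID, and everything else is vendor specific. Here's a rough sketch for telling them apart. The helper name short_uuid and the little lookup table are mine, and the table only covers a few of the services in the dump.

```python
import uuid

# 16-bit assigned numbers sit in the first group of the Bluetooth Base UUID.
BASE_SUFFIX = "-0000-1000-8000-00805f9b34fb"

# A few assigned service numbers that show up in the dump above.
KNOWN_SERVICES = {
    0x1800: "Generic Access",
    0x1801: "Generic Attribute",
    0x180A: "Device Information",
    0x180F: "Battery Service",
}

def short_uuid(full):
    """Return the 16-bit number for a Base-UUID-derived UUID, else None."""
    text = str(uuid.UUID(full))  # validates and normalises to lowercase
    if text.startswith("0000") and text.endswith(BASE_SUFFIX):
        return int(text[4:8], 16)
    return None

for u in ("0000180f-0000-1000-8000-00805f9b34fb",
          "ebe0ccb0-7a0a-4b0c-8a1a-6ff2997da3a6"):
    short = short_uuid(u)
    label = KNOWN_SERVICES.get(short, "vendor specific, or not in my table")
    print(u, "->", label)
```

The ebe0ccb0-… service comes back as None here, which is the hint that it's the Xiaomi-specific one we're after.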

The characteristic you need is ebe0ccc1-7a0a-4b0c-8a1a-6ff2997da3a6, under the similar service UUID. So now, we just “subscribe” to notifications from it.

# menu gatt

[LYWSD03MMC]# select-attribute ebe0ccc1-7a0a-4b0c-8a1a-6ff2997da3a6

[LYWSD03MMC:/service0021/char0035]# notify on

[CHG] Attribute /org/bluez/hci0/dev_MAC_ADDRESS/service0021/char0035 Notifying: yes

Notify started

[CHG] Attribute /org/bluez/hci0/dev_MAC_ADDRESS/service0021/char0035 Value:

36 09 2a f6 0b 6.*..

[CHG] Attribute /org/bluez/hci0/dev_MAC_ADDRESS/service0021/char0035 Value:

35 09 2a f6 0b 5.*..

[CHG] Attribute /org/bluez/hci0/dev_MAC_ADDRESS/service0021/char0035 Value:

33 09 2a f6 0b 3.*..

[LYWSD03MMC:/service0021/char0035]# notify off

And…. now we can go back to https://github.com/JsBergbau/MiTemperature2#more-info and decode these readings.

>>> import binascii, struct

>>> x = "2a 09 2a 4e 0c"

>>> struct.unpack("<HbH",binascii.unhexlify((x).replace(" ","")))

(2346, 42, 3150)

With Python, that gives us a temperature of 23.46°C, humidity of 42%, and a battery level of 3.150V.

Yay.
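If you want that as something reusable, here's a minimal sketch of the same decode wrapped in a function. The name decode_reading is made up, and I've assumed the temperature is a signed 16-bit value (so sub-zero readings come out negative) and the humidity an unsigned byte; the one-liner above uses "<HbH", which gives the same answer for ordinary indoor readings.

```python
import struct

def decode_reading(payload):
    """Decode a 5-byte notification into (temp in °C, humidity in %, battery in V)."""
    if len(payload) != 5:
        raise ValueError("expected 5 bytes, got %d" % len(payload))
    # "<hBH": little-endian signed 16-bit, unsigned 8-bit, unsigned 16-bit.
    # Signed temperature is an assumption, see the note above.
    raw_temp, humidity, millivolts = struct.unpack("<hBH", payload)
    return raw_temp / 100, humidity, millivolts / 1000

# bytes.fromhex() ignores the spaces, so bluetoothctl's hex column pastes in as-is.
print(decode_reading(bytes.fromhex("2a 09 2a 4e 0c")))
print(decode_reading(bytes.fromhex("36 09 2a f6 0b")))
```

Only paste the hex column, not the ASCII rendering bluetoothctl prints next to it.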

Originally, I was blind to the subtle differences in the characteristic UUIDs, and thought I had to hand iterate them. The below text at least shows how you can do that, but it’s unnecessary.

Ok, now the nasty bit. We want to get notifications from one of the characteristics on service UUID ebe0ccb0-7a0a-4b0c-8a1a-6ff2997da3a6. But you can’t just “select” this, because, at least as far as I can tell, there’s no way of saying _which_ descriptor you want.

So, first you need to scroll through that big dump of descriptors (or view it again with list-attributes in the gatt menu) to work out the “locally assigned service handle”. In my case it’s 0021, but I’ve got zero faith that’s a stable number. You figure it out from a line like this…

[NEW] Characteristic (Handle 0xd944)

/org/bluez/hci0/dev_MAC_ADDRESS/service0021/char0048

ebe0ccd9-7a0a-4b0c-8a1a-6ff2997da3a6

Vendor specific
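If you'd rather not eyeball those paths, here's a small sketch that pulls the pieces out of one. It only assumes the /dev_…/serviceNNNN/charNNNN/descNNNN layout visible in the dump above; split_gatt_path is a made-up name.

```python
import re

# Matches paths such as .../dev_XX/service0021/char0035/desc0037
PATH_RE = re.compile(
    r"/dev_(?P<device>[^/]+)"
    r"/service(?P<service>[0-9a-fA-F]{4})"
    r"(?:/char(?P<char>[0-9a-fA-F]{4}))?"
    r"(?:/desc(?P<desc>[0-9a-fA-F]{4}))?$"
)

def split_gatt_path(path):
    """Split a GATT object path into device, service, char and desc parts."""
    match = PATH_RE.search(path)
    if match is None:
        raise ValueError("not a GATT object path: %r" % path)
    # Parts that are absent (e.g. no descriptor) come back as None.
    return match.groupdict()

print(split_gatt_path("/org/bluez/hci0/dev_MAC_ADDRESS/service0021/char0048"))
```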

Now, which characteristic is which? Well, thankfully, Xiaomi uses the lovely Characteristic User Description in their descriptors, so you just go through them one by one, “read”ing them, until you get to the right one…

[LYWSD03MMC:]# select-attribute /org/bluez/hci0/dev_MAC_ADDRESS/service0021/char0035/desc0037

[LYWSD03MMC:/service0021/char0035/desc0037]# read

Attempting to read /org/bluez/hci0/dev_MAC_ADDRESS/service0021/char0035/desc0037

[CHG] Attribute /org/bluez/hci0/dev_MAC_ADDRESS/service0021/char0035/desc0037 Value:

54 65 6d 70 65 72 61 74 75 72 65 20 61 6e 64 20 Temperature and

48 75 6d 69 64 69 74 79 00 Humidity.

54 65 6d 70 65 72 61 74 75 72 65 20 61 6e 64 20 Temperature and

48 75 6d 69 64 69 74 79 00 Humidity.

[LYWSD03MMC:/service0021/char0035/desc0037]#
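bluetoothctl already prints the ASCII next to the hex, but if you're collecting these descriptions in a script, the hex column turns back into text easily enough. A minimal sketch, assuming the value is UTF-8 with a trailing NUL as in the dump above (hexdump_to_text is a made-up name):

```python
def hexdump_to_text(*hex_lines):
    """Join the hex columns of a multi-line value and decode them as text."""
    raw = bytes.fromhex(" ".join(hex_lines))
    # Drop the trailing NUL terminator before decoding.
    return raw.rstrip(b"\x00").decode("utf-8")

print(hexdump_to_text(
    "54 65 6d 70 65 72 61 74 75 72 65 20 61 6e 64 20",
    "48 75 6d 69 64 69 74 79 00",
))
```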

The sucky bit is that you need to manually select _each_ of the “descNNNN” underneath _each_ of the “charNNNN” under this service. (On my devices, it’s the second characteristic with dual descriptors…)

Now, select the characteristic itself. (You can’t get notifications on the descriptor…)

[LYWSD03MMC:/service0021/char0035/desc0037]# select-attribute /org/bluez/hci0/dev_MAC_ADDRESS/service0021/char0035

[LYWSD03MMC:/service0021/char0035]# notify on

[CHG] Attribute /org/bluez/hci0/dev_MAC_ADDRESS/service0021/char0035 Notifying: yes

Notify started

[CHG] Attribute /org/bluez/hci0/dev_MAC_ADDRESS/service0021/char0035 Value:

2b 09 2a 4e 0c +.*N.

[CHG] Attribute /org/bluez/hci0/dev_MAC_ADDRESS/service0021/char0035 Value:

2a 09 2a 4e 0c *.*N.

[CHG] Attribute /org/bluez/hci0/dev_MAC_ADDRESS/service0021/char0035 Value:

2b 09 2a 4e 0c +.*N.

[CHG] Attribute /org/bluez/hci0/dev_MAC_ADDRESS/service0021/char0035 Value:

2b 09 2a 4e 0c +.*N.

[CHG] Attribute /org/bluez/hci0/dev_MAC_ADDRESS/service0021/char0035 Value:

29 09 2a 4e 0c ).*N.

[LYWSD03MMC:/service0021/char0035]# notify off